In the digital landscape, data isn’t just a resource; it’s the foundation for informed decision-making, innovation, and business growth. To harness its power, organizations turn to advanced data analytics tools.

We’ll dive into five prominent data analytics tools:

- Apache Hadoop

- Apache Spark

- Google Cloud Dataflow

- Microsoft Azure Stream Analytics

- AWS EMR

1. Apache Hadoop: Taming the Data Deluge with Scalability

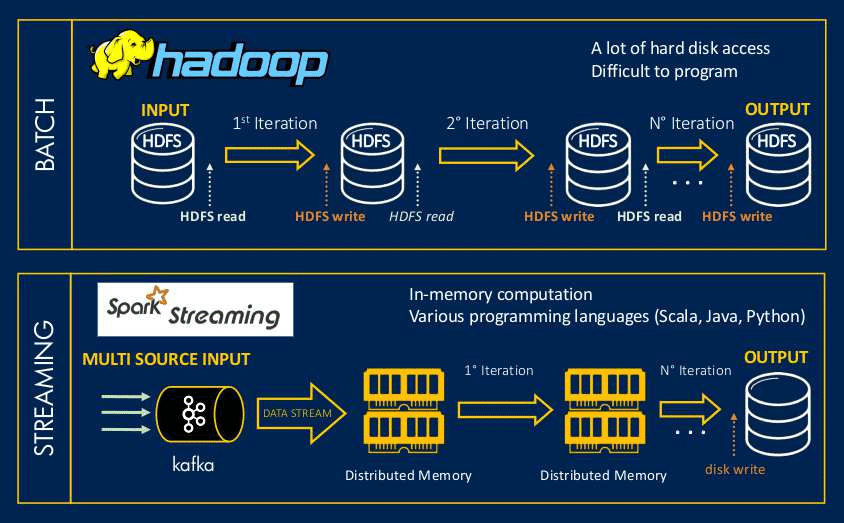

Apache Hadoop is an open-source framework for storing and processing large datasets across clusters of machines. It is designed to be scalable and fault-tolerant, making it well suited to big data applications. Hadoop stores data in a distributed file system called HDFS, which replicates data blocks across nodes, and processes it with the MapReduce programming model, which splits a job into map and reduce tasks that run in parallel. Hadoop’s strength lies in its ability to process massive datasets efficiently, uncovering trends and patterns that might otherwise have gone unnoticed.
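
To make the MapReduce model concrete, here is a minimal single-process sketch of a word count in plain Python. It does not use Hadoop's API; the function names (`map_phase`, `shuffle_phase`, `reduce_phase`, `word_count`) are hypothetical and chosen only for illustration. On a real cluster, the map and reduce steps would run in parallel on many nodes, with the framework handling the shuffle between them.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map: emit a (word, 1) pair for every word in one input line."""
    for word in line.lower().split():
        yield word, 1


def shuffle_phase(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle: group all emitted values by their key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return grouped


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce: collapse the values for one key into a single total."""
    return key, sum(values)


def word_count(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce in sequence over the input lines."""
    pairs = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle_phase(pairs)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    sample = ["big data big insights", "data drives decisions"]
    print(word_count(sample))
```

Because each line is mapped independently and each key is reduced independently, both phases can be spread across machines, which is what lets the model scale to very large datasets.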

2. Apache Spark: Accelerating Analytics with In-Memory Processing

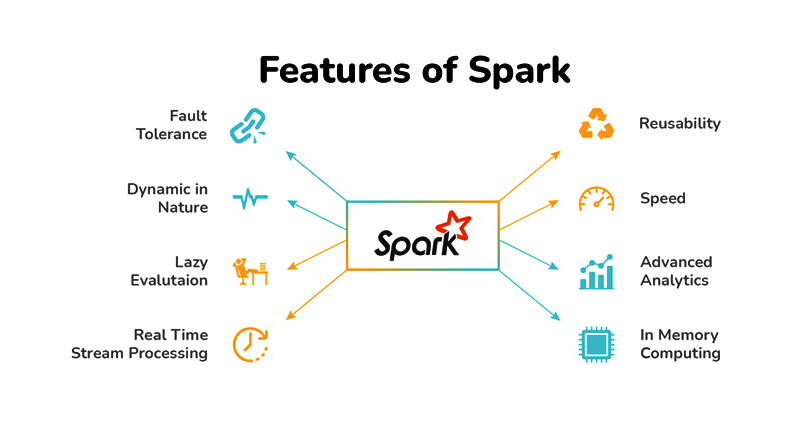

Apache Spark is an open-source cluster computing framework that can be used for both batch and streaming data processing. It is faster than Hadoop MapReduce for many types of data processing tasks, largely because it can keep intermediate data in memory instead of writing it to disk between steps, and it can also be used for machine learning and artificial intelligence applications. Spark’s unified platform streamlines the development of complex data pipelines, making it a popular choice for organizations seeking faster and more diverse analytics.

3. Google Cloud Dataflow: Crafting Insights with Managed Data Processing

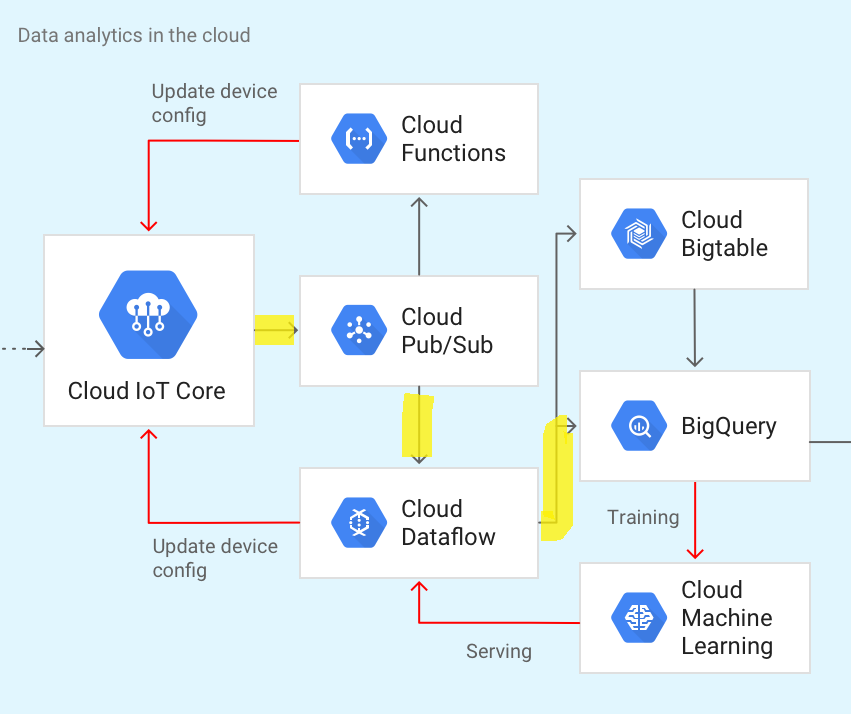

Google Cloud Dataflow is a managed service for building and running Apache Beam pipelines on Google Cloud Platform. Beam provides a single high-level programming model for both batch and streaming jobs, and Dataflow executes those pipelines without requiring you to provision or manage clusters. Dataflow’s serverless architecture simplifies infrastructure management, letting data engineers focus on building analytics pipelines. Its integration with Google Cloud’s ecosystem enhances scalability and eases the transition from development to production environments.

4. Microsoft Azure Stream Analytics: Real-Time Insights from Streaming Data

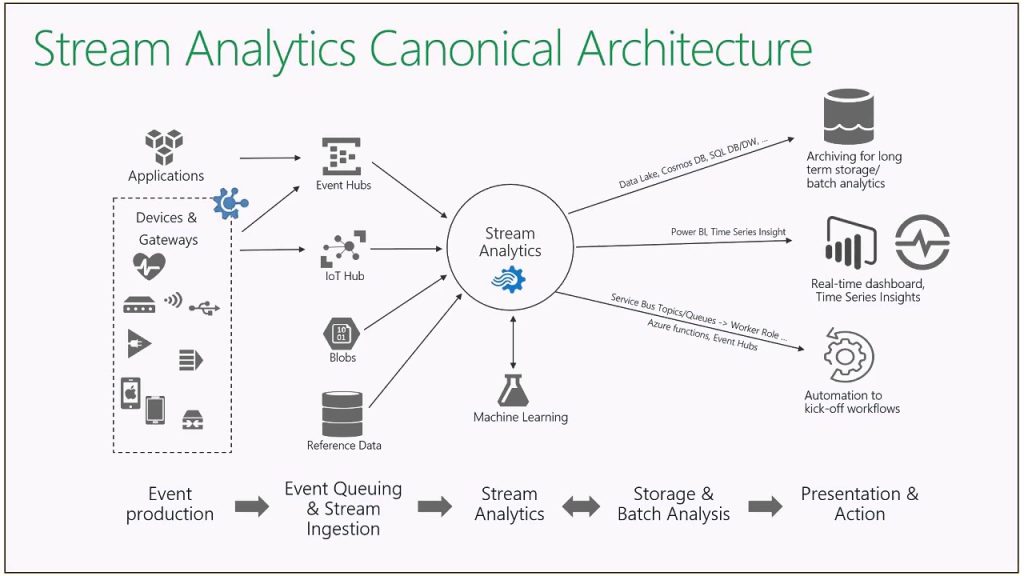

Microsoft Azure Stream Analytics is a fully managed service for processing and analyzing streaming data in real time. It can be used to build a variety of streaming applications, such as fraud detection, anomaly detection, and predictive maintenance. Stream Analytics’ integration with Azure services enhances its versatility, allowing data engineers to incorporate machine learning and advanced analytics into their pipelines. This tool is particularly valuable for IoT applications and real-time monitoring.
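
A common building block of real-time analytics is aggregating events over fixed, non-overlapping time windows (often called tumbling windows). The sketch below illustrates the idea in plain Python as a stand-in; it is not Stream Analytics' own query language or API, and the function name `tumbling_window_counts`, the event shape, and the device IDs are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def tumbling_window_counts(
    events: Iterable[Tuple[float, str]], window_seconds: int
) -> Dict[Tuple[int, str], int]:
    """Count events per device within fixed, non-overlapping time windows.

    Each event is a (timestamp_in_seconds, device_id) pair (hypothetical shape).
    The result maps (window_start_in_seconds, device_id) to an event count.
    """
    counts: Dict[Tuple[int, str], int] = defaultdict(int)
    for timestamp, device_id in events:
        # Every timestamp belongs to exactly one window, identified by its start.
        window_start = int(timestamp // window_seconds) * window_seconds
        counts[(window_start, device_id)] += 1
    return dict(counts)


if __name__ == "__main__":
    # Hypothetical sensor readings: (seconds since start, device id).
    readings = [(0.5, "a"), (3.0, "a"), (9.9, "b"), (10.0, "a"), (12.0, "b")]
    for (start, device), count in sorted(tumbling_window_counts(readings, 10).items()):
        print(f"window starting at {start}s, device {device}: {count} event(s)")
```

A sudden spike in the count for one device within a single window is the kind of signal an anomaly-detection or monitoring pipeline might act on. A managed streaming service additionally deals with concerns this sketch ignores, such as late or out-of-order events and scaling across partitions.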

5. AWS EMR: Flexibility and Scalability for Data Processing

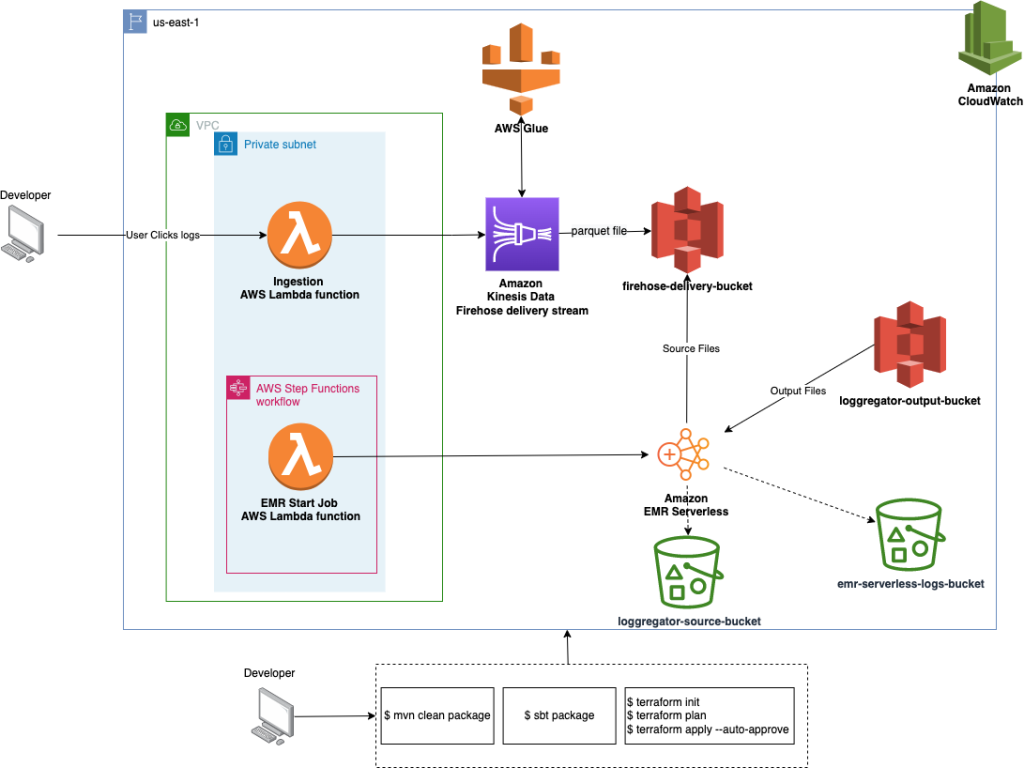

AWS EMR is a managed service for setting up, operating, and scaling Apache Hadoop and Apache Spark clusters on Amazon Web Services. Features such as automated provisioning, monitoring, and scaling make it a good fit for big data applications, and because EMR handles the infrastructure, users can focus on analyzing data rather than managing clusters. It also integrates with other AWS services, making it an attractive choice for organizations already invested in the AWS ecosystem.

Conclusion:

The realm of data analytics is rich and diverse, and these five tools exemplify the myriad ways organizations can derive insights from their data. From handling massive datasets to real-time streaming analytics, these tools cater to various needs and use cases. The key is to select the right tool based on your organization’s data processing requirements, existing infrastructure, and desired outcomes.