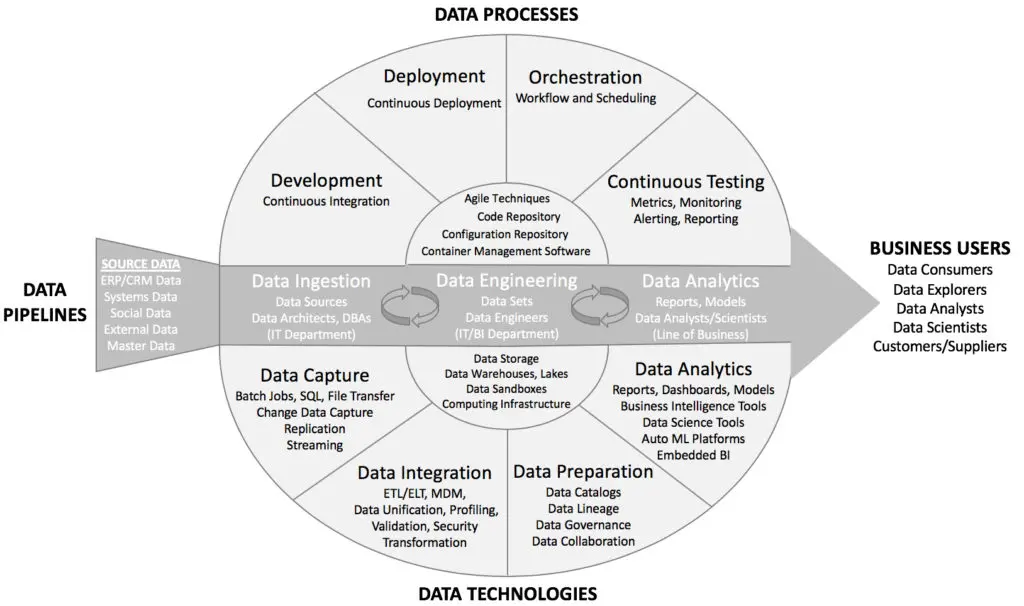

DataOps is a modern approach to data management that aims to streamline and automate the process of moving data from source to consumption. It brings together people, processes, and technologies to improve data reliability, quality, and time-to-market.

What is DataOps?

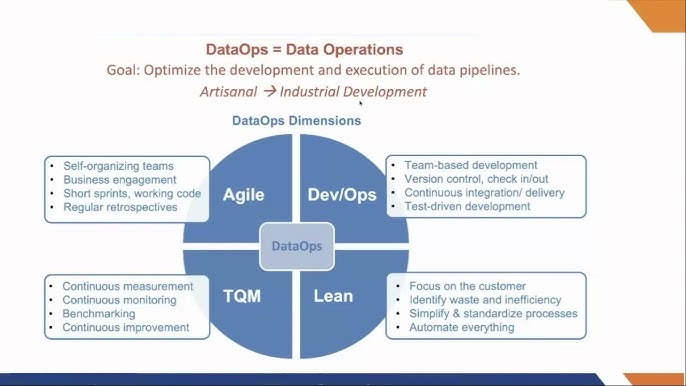

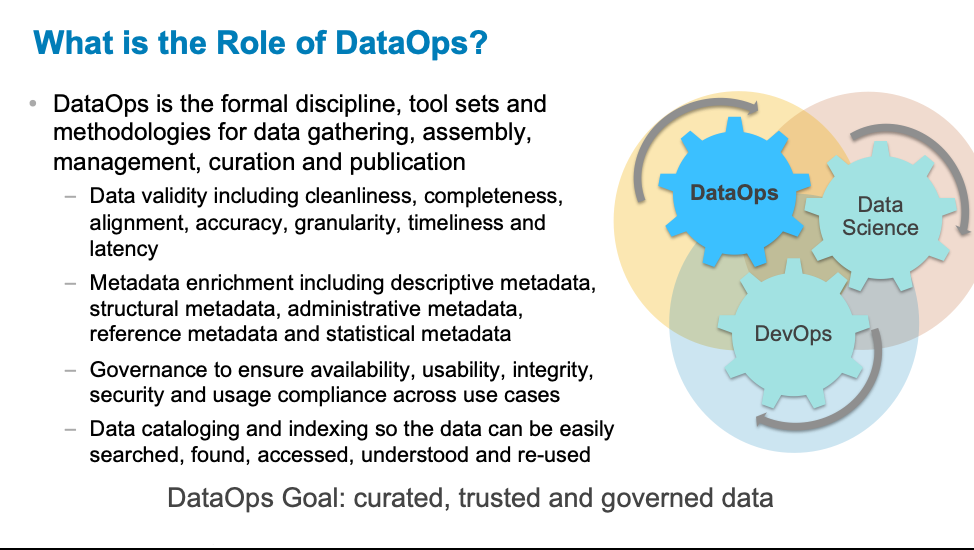

DataOps is a set of practices, tools, and frameworks for improving collaboration among data engineers, data scientists, and other stakeholders across the data lifecycle. The goal is to automate and streamline data processes, much as DevOps does for software development. With DataOps in place, organizations can keep data continuously available, reliable, and ready for analysis.

1. Agile Data Development

Agile principles, widely adopted in software development, form the foundation of DataOps. Agile data development emphasizes iterative, incremental, and collaborative approaches to building data pipelines and analytics solutions. The flexibility of agile enables teams to rapidly respond to changing requirements, improve efficiency, and reduce time-to-insight.

Key Elements:

- Continuous feedback loops

- Cross-functional collaboration

- Short development cycles and sprints

- Adaptability to change in data needs

2. Data Pipeline Automation

Automation is at the heart of DataOps. Data pipeline automation ensures that the processes for collecting, processing, and delivering data are fast, repeatable, and reliable. Automated pipelines reduce the risk of human error and allow data teams to focus on more strategic tasks.

Key Elements:

- Automated ETL (Extract, Transform, Load) processes

- Continuous integration and delivery (CI/CD) for data

- Workflow orchestration tools like Apache Airflow, Prefect, or Luigi

- Automated testing for data validation and integrity
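
As a rough illustration of these elements, the sketch below chains extract, transform, validate, and load steps so that a failed check stops the run before any data is loaded. It uses only the Python standard library; the sample CSV, the column names, and the in-memory `warehouse` list are assumptions made for the example and stand in for a real source and target. An orchestrator such as Airflow or Prefect would schedule and retry steps like these, but that wiring is not shown here.

```python
import csv
import io

# Hypothetical raw input; a real pipeline would read from a source system.
RAW_CSV = """order_id,amount,currency
1001,19.99,usd
1002,5.00,eur
1003,,usd
"""


def extract(raw_text):
    """Parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))


def transform(rows):
    """Drop rows with a missing amount, then normalize types and casing."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue
        cleaned.append({
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "currency": row["currency"].upper(),
        })
    return cleaned


def validate(rows):
    """Automated checks: raise instead of loading bad data."""
    ids = [r["order_id"] for r in rows]
    if len(ids) != len(set(ids)):
        raise ValueError("duplicate order_id found")
    if any(r["amount"] < 0 for r in rows):
        raise ValueError("negative amount found")
    return rows


def load(rows, target):
    """Append rows to an in-memory list standing in for a warehouse table."""
    target.extend(rows)
    return len(rows)


def run_pipeline(raw_text, target):
    """Run every step in order; any exception aborts the run before load."""
    return load(validate(transform(extract(raw_text))), target)


warehouse = []
loaded = run_pipeline(RAW_CSV, warehouse)
print(f"loaded {loaded} rows")
```

Because each step is a plain function, the same checks can run in a CI job against sample data before a pipeline change is deployed.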

3. Monitoring and Observability

To maintain the quality and reliability of data, continuous monitoring is essential. DataOps encourages the use of observability tools to track data pipeline performance, identify bottlenecks, and detect issues in real time. This proactive approach helps teams resolve problems before they affect downstream analytics and business decisions.

Key Elements:

- Real-time data monitoring

- Performance metrics and alerts

- Data lineage tracking

- Tools like Grafana, Prometheus, and Datadog for monitoring data health
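
To make the idea of performance metrics and alerts concrete, here is a minimal sketch of a health check over the metrics of one pipeline run. The `RunMetrics` fields, the threshold values, and the pipeline name are all hypothetical; in practice these numbers would typically be exported to a monitoring system rather than checked inline.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class RunMetrics:
    """Metrics recorded for one pipeline run (illustrative fields)."""
    pipeline: str
    rows_loaded: int
    duration_seconds: float
    last_success: datetime


def check_health(metrics, now, min_rows=1, max_duration=300.0,
                 max_staleness=timedelta(hours=24)):
    """Return a list of alert messages; an empty list means healthy."""
    alerts = []
    if metrics.rows_loaded < min_rows:
        alerts.append(f"{metrics.pipeline}: only {metrics.rows_loaded} rows loaded")
    if metrics.duration_seconds > max_duration:
        alerts.append(f"{metrics.pipeline}: run took {metrics.duration_seconds:.0f}s")
    if now - metrics.last_success > max_staleness:
        alerts.append(f"{metrics.pipeline}: no successful run within {max_staleness}")
    return alerts


# Fixed timestamps keep the example deterministic.
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
metrics = RunMetrics(
    pipeline="orders_daily",
    rows_loaded=1500,
    duration_seconds=420.0,
    last_success=now - timedelta(hours=30),
)
alerts = check_health(metrics, now)
for message in alerts:
    print(message)
```

Here the row count is fine, but the run is both slow and stale, so two alerts are produced.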

4. Collaboration and Communication

Collaboration between data engineers, data scientists, business analysts, and other stakeholders is critical for success in DataOps. By breaking down silos, DataOps fosters an environment where teams work together seamlessly. Tools that facilitate collaboration, such as version control systems and communication platforms, play a crucial role.

Key Elements:

- Version control for data pipelines (e.g., Git)

- Collaborative workspaces (e.g., Slack, Microsoft Teams, Jira)

- Shared documentation and data governance frameworks

- Data cataloging tools for better transparency and understanding of data assets

5. Data Quality and Governance

Ensuring high data quality is essential for building trust in data-driven decisions. DataOps integrates robust data quality and governance frameworks to ensure that data is accurate, consistent, and compliant with regulations. This involves setting up automated checks and rules to maintain the integrity of data at every stage of its lifecycle.

Key Elements:

- Data quality checks and validation

- Metadata management

- Compliance with data privacy regulations (e.g., GDPR, CCPA)

- Tools like Great Expectations and Talend for data quality management
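
The automated checks and rules described above can be sketched as a small set of named rules applied to every record. This is plain Python, not the API of Great Expectations or Talend; the sample records, rule names, and thresholds are assumptions chosen for illustration.

```python
# Hypothetical sample records; the second and third each break one rule.
records = [
    {"customer_id": 1, "email": "a@example.com", "age": 34},
    {"customer_id": 2, "email": None, "age": 29},
    {"customer_id": 3, "email": "c@example.com", "age": -5},
]

# Each rule maps a name to a predicate that returns True for a valid row.
RULES = {
    "email_not_null": lambda row: row["email"] is not None,
    "age_in_range": lambda row: 0 <= row["age"] <= 120,
}


def run_checks(rows, rules):
    """Return, for each rule, the customer_ids of the rows that fail it."""
    return {
        name: [row["customer_id"] for row in rows if not rule(row)]
        for name, rule in rules.items()
    }


report = run_checks(records, RULES)
passed = all(not failures for failures in report.values())
print(report, "passed" if passed else "failed")
```

Keeping rules as data makes it straightforward to version them alongside the pipeline and to report which rule failed for which record.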

6. Scalability and Flexibility

As organizations grow, their data needs will evolve, requiring systems that can scale accordingly. DataOps emphasizes building flexible, scalable data architectures that can accommodate increased data volumes, new data sources, and changing analytics requirements without significant disruption.

Key Elements:

- Cloud-based infrastructure for scalability (e.g., AWS, Google Cloud, Azure)

- Containerization and orchestration (e.g., Docker, Kubernetes)

- Modular and scalable data architecture design
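
One way to read "modular" in code terms is that sources and transformation steps are interchangeable parts behind a small interface, so a new data source can be added without touching the rest of the pipeline. The sketch below is an assumption about what such a design could look like; `Source`, `ListSource`, and `Pipeline` are hypothetical names, not part of any library.

```python
from typing import Callable, Iterable, Protocol


class Source(Protocol):
    """Anything that can yield rows as dicts."""
    def read(self) -> Iterable[dict]: ...


class ListSource:
    """In-memory source standing in for a database, API, or file reader."""
    def __init__(self, rows):
        self.rows = rows

    def read(self):
        return iter(self.rows)


class Pipeline:
    """Reads from every source, then applies each step to every row."""
    def __init__(self, sources: list[Source], steps: list[Callable[[dict], dict]]):
        self.sources = sources
        self.steps = steps

    def run(self):
        rows = [row for source in self.sources for row in source.read()]
        for step in self.steps:
            rows = [step(row) for row in rows]
        return rows


def add_region(row):
    """Example step: tag each row with a default region."""
    return {**row, "region": row.get("region", "unknown")}


pipeline = Pipeline(
    sources=[ListSource([{"id": 1}]), ListSource([{"id": 2, "region": "eu"}])],
    steps=[add_region],
)
result = pipeline.run()
print(result)
```

Adding a third source here means appending one object to `sources`; the steps and the `run` method stay unchanged.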

7. Security and Compliance

With the rise in data breaches and growing concerns around data privacy, DataOps prioritizes security and compliance at every stage of the data lifecycle. This means integrating security practices into data pipelines, ensuring that sensitive data is protected, and complying with relevant regulations.

Key Elements:

- Data encryption at rest and in transit

- Regular security audits

- Compliance with regulations and standards such as HIPAA, GDPR, and SOC 2

- Role-based access controls (RBAC) and identity management
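
As a minimal sketch of role-based access control inside a data pipeline, the example below returns raw records only to roles with the right permission and masks sensitive fields for everyone else. The role names, permission strings, and masking format are assumptions for illustration; a production system would usually delegate these decisions to an identity provider or to the warehouse's own access controls.

```python
# Hypothetical mapping of roles to permissions.
ROLE_PERMISSIONS = {
    "analyst": {"read:masked"},
    "engineer": {"read:masked", "read:raw", "write"},
}


def can(role, permission):
    """Return True if the role grants the permission; unknown roles get none."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def mask_email(email):
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return local[:1] + "***@" + domain


def read_customer(role, record):
    """Return the record at the level of detail the role is allowed to see."""
    if can(role, "read:raw"):
        return dict(record)
    if can(role, "read:masked"):
        return {**record, "email": mask_email(record["email"])}
    raise PermissionError(f"role {role!r} may not read customer data")


customer = {"customer_id": 7, "email": "jane@example.com"}
print(read_customer("engineer", customer))
print(read_customer("analyst", customer))
```

A role that is not listed, such as a hypothetical `"guest"`, raises `PermissionError` instead of silently receiving data.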