DataOps is emerging as an approach that brings Agile methodologies and DevOps principles to data management. This post explores how DataOps can accelerate data analytics, improve data quality, and create a more agile analytics ecosystem.

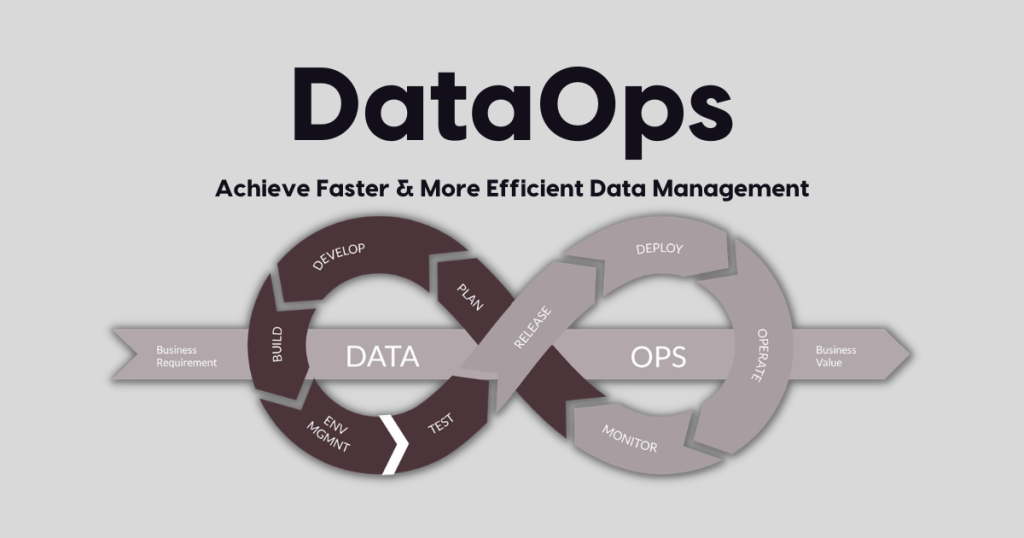

1. What is DataOps?

DataOps, short for Data Operations, is a framework that applies DevOps principles to data analytics, promoting collaboration, automation, and efficiency. This approach streamlines the entire data lifecycle, from ingestion and transformation to analysis and reporting.

- Core Principles: DataOps emphasizes Agile practices, continuous integration and delivery (CI/CD), and automation, making data processes faster and more reliable.

2. Why Use DataOps in Data Analytics?

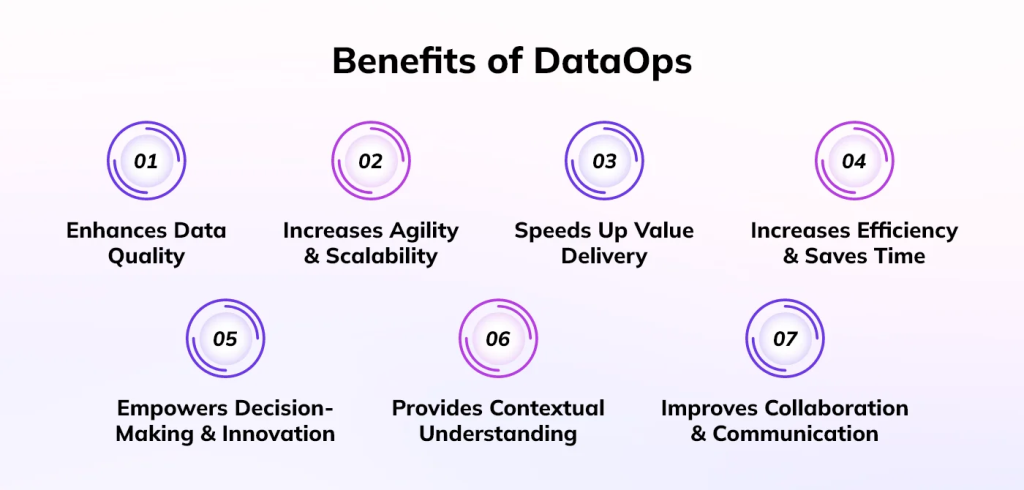

DataOps provides significant benefits to data analytics, making data operations more responsive and scalable. Here’s why DataOps is becoming essential:

- Improved Data Quality: Automated validation and testing within DataOps pipelines reduce errors and improve data accuracy, essential for trustworthy analytics.

- Enhanced Collaboration: DataOps fosters collaboration across teams, ensuring data engineering, data science, and business teams work together seamlessly.

- Faster Data Delivery: Automation and continuous integration shorten the time between a change and its deployment, so data moves through the pipeline faster and can support near-real-time insights.

3. How DataOps Accelerates Data Analytics

a. Automation of Data Pipelines

DataOps uses automated workflows to handle data ingestion, transformation, and loading. This removes manual tasks, reduces bottlenecks, and ensures data is consistently prepared for analysis.

- Data Ingestion Automation: Tools like Apache NiFi and Fivetran automate data ingestion from multiple sources, accelerating the process.

- Transformation Automation: Transformations are defined once and run automatically after ingestion, so data arrives in the right format for analytics with minimal delay.
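To make the idea concrete, here is a minimal sketch of an automated ingest, transform, and load flow using only the Python standard library. It is an illustration, not how NiFi or Fivetran work internally; the sample CSV, the `orders` table, and the function names `ingest`, `transform`, `load`, and `run_pipeline` are all assumptions made up for this example.

```python
import csv
import io
import sqlite3

# Hypothetical raw extract; in practice this would come from a source system.
RAW_CSV = """order_id,amount,currency
1,19.99,usd
2,5.00,USD
3,42.50,eur
"""

def ingest(raw_text):
    """Ingestion step: parse raw CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transformation step: cast types and normalize currency codes."""
    return [
        {
            "order_id": int(row["order_id"]),
            "amount": float(row["amount"]),
            "currency": row["currency"].upper(),
        }
        for row in rows
    ]

def load(rows, conn):
    """Load step: write transformed rows into a SQLite table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    # INSERT OR REPLACE keeps re-runs idempotent for the same order_id.
    conn.executemany(
        "INSERT OR REPLACE INTO orders VALUES (:order_id, :amount, :currency)",
        rows,
    )
    conn.commit()
    return len(rows)

def run_pipeline(raw_text, conn):
    """Run all three steps in order, with no manual hand-offs."""
    return load(transform(ingest(raw_text)), conn)

conn = sqlite3.connect(":memory:")
loaded = run_pipeline(RAW_CSV, conn)
currencies = [
    r[0] for r in conn.execute(
        "SELECT DISTINCT currency FROM orders ORDER BY currency"
    )
]
print(loaded, currencies)
```

Because each step is a plain function, a scheduler or orchestrator could call `run_pipeline` on a timer or on file arrival, which is where the manual work drops out.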

b. Continuous Testing and Validation

In traditional data workflows, data validation often occurs late in the process, causing delays if issues arise. DataOps introduces testing and validation throughout the data pipeline, catching errors earlier.

- Schema Validation: Automated checks verify that data conforms to expected schemas, catching inconsistencies before they disrupt analytics.

- Data Quality Checks: Continuous data quality tests ensure that only reliable, clean data reaches the analytics team.
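The two kinds of checks above can be sketched in a few lines of Python. This is a simplified illustration under assumed names: the `EXPECTED_SCHEMA` mapping, the sample rows, and the specific quality rules (non-negative amount, three-letter currency code) are hypothetical and stand in for whatever your own data contract requires.

```python
# Hypothetical schema: column name -> expected Python type.
EXPECTED_SCHEMA = {"order_id": int, "amount": float, "currency": str}

def validate_schema(row, schema=EXPECTED_SCHEMA):
    """Return a list of schema errors for one row (empty if it conforms)."""
    errors = []
    for column, expected_type in schema.items():
        if column not in row:
            errors.append(f"missing column: {column}")
        elif not isinstance(row[column], expected_type):
            errors.append(
                f"{column}: expected {expected_type.__name__}, "
                f"got {type(row[column]).__name__}"
            )
    for column in row:
        if column not in schema:
            errors.append(f"unexpected column: {column}")
    return errors

def check_quality(row):
    """Return a list of rule violations; rules only run on well-typed values."""
    errors = []
    if isinstance(row.get("amount"), float) and row["amount"] < 0:
        errors.append("amount must be non-negative")
    if isinstance(row.get("currency"), str) and len(row["currency"]) != 3:
        errors.append("currency must be a 3-letter code")
    return errors

def split_valid_invalid(rows):
    """Separate clean rows from rejected rows, keeping the reasons."""
    valid, rejected = [], []
    for row in rows:
        errors = validate_schema(row) + check_quality(row)
        if errors:
            rejected.append((row, errors))
        else:
            valid.append(row)
    return valid, rejected

rows = [
    {"order_id": 1, "amount": 19.99, "currency": "USD"},
    {"order_id": 2, "amount": "5.00", "currency": "USD"},  # wrong type
    {"order_id": 3, "amount": -4.0, "currency": "EUR"},    # fails quality rule
    {"order_id": 4, "currency": "USD"},                    # missing column
]
valid, rejected = split_valid_invalid(rows)
for row, errors in rejected:
    print(row["order_id"], errors)
```

Running checks like these at each pipeline stage, rather than once at the end, is what lets bad records be quarantined before they reach the analytics team.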

c. Real-Time Monitoring and Alerts

DataOps adds real-time monitoring so teams can track data as it flows through the pipeline. When an issue occurs, alerts notify the team so it can be resolved quickly.

- End-to-End Monitoring: DataOps tools provide visibility into the entire data pipeline, helping teams monitor data freshness, accuracy, and performance.

- Proactive Issue Resolution: Real-time alerts enable teams to address issues quickly, ensuring consistent data flow and reducing downtime.
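As one example of what such monitoring might look like, the sketch below checks data freshness and raises an alert for any table that has not been loaded recently. The table names, the one-hour threshold, and the `alert` callback are assumptions for illustration; a real setup would typically send the alert to a chat channel or paging system instead of a list.

```python
from datetime import datetime, timedelta, timezone

def check_freshness(last_loaded_at, now, max_age):
    """Return (is_fresh, age) for a table given its last load time."""
    age = now - last_loaded_at
    return age <= max_age, age

def monitor(tables, now, max_age, alert):
    """Check every table and call `alert` once for each stale one."""
    stale = []
    for name, last_loaded_at in tables.items():
        fresh, age = check_freshness(last_loaded_at, now, max_age)
        if not fresh:
            alert(f"{name} is stale: last load was {age} ago")
            stale.append(name)
    return stale

# Fixed timestamp so the example is reproducible.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
tables = {
    "orders": now - timedelta(minutes=5),
    "customers": now - timedelta(hours=3),
}

alerts = []  # stand-in for a real notification channel
stale = monitor(tables, now, timedelta(hours=1), alerts.append)
print(stale, alerts)
```

Passing `alert` in as a function keeps the check itself independent of how the team chooses to be notified.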

d. Scalability and Flexibility

As data demands grow, DataOps frameworks make it easier to scale data processes, allowing organizations to handle larger data volumes and adapt to changing business needs.

- Elastic Scaling: DataOps tools support scalable architecture, enabling data pipelines to adjust dynamically to workload increases.

- Adaptable Pipelines: By following Agile principles, DataOps makes it easy to modify data pipelines as new sources or requirements emerge.

4. Implementing DataOps for Reliable Data Analytics

To fully leverage DataOps in analytics, organizations need a thoughtful implementation strategy:

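- Choose the Right Tools: Tools such as dbt (SQL transformations and tests), Apache Airflow (workflow orchestration and scheduling), and DataKitchen (a dedicated DataOps platform) cover different parts of the pipeline. Pick a combination that fits your automation and monitoring needs.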

- Foster Cross-Functional Collaboration: Bring data engineers, analysts, and business stakeholders together to align DataOps workflows with business objectives.

- Emphasize Automation: Automate every step that can reasonably be automated to ensure consistency and reduce manual errors, prioritizing the steps that affect data quality and reliability.

5. Conclusion: DataOps as a Foundation for Reliable Data Analytics

DataOps is revolutionizing data analytics by making data pipelines faster, more reliable, and scalable. By automating data workflows, implementing continuous testing, and ensuring real-time monitoring, DataOps enhances data quality and accelerates time to insight. For organizations looking to stay competitive in the analytics-driven world, adopting DataOps is a strategic move toward faster, more reliable data analytics.