DataOps, or Data Operations, optimizes data workflows to improve data quality, reduce delivery time, and foster collaboration across teams. Measuring the Return on Investment (ROI) for DataOps is essential to ensure that these benefits translate into tangible business value. In this post, we’ll explore the key metrics and KPIs for evaluating DataOps success, helping organizations track their progress and maximize their DataOps ROI.

1. Why Measure DataOps ROI?

Understanding the ROI of DataOps enables organizations to:

- Justify Investments: Demonstrate the value of DataOps investments by quantifying improvements in efficiency, quality, and speed.

- Optimize Processes: Identify areas where DataOps is adding value and where further optimization is needed.

- Align Data Goals with Business Goals: Ensure that DataOps initiatives support larger business objectives like faster decision-making and data-driven innovation.

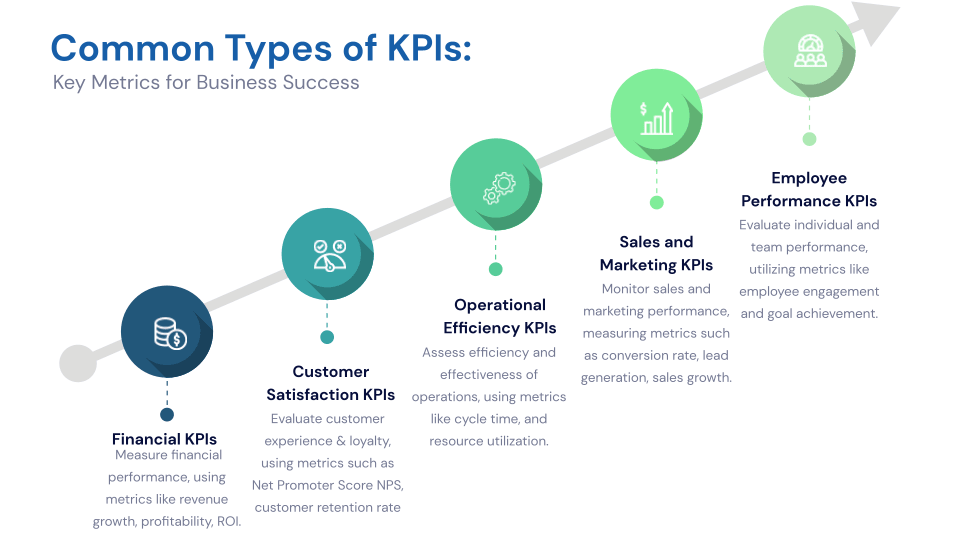

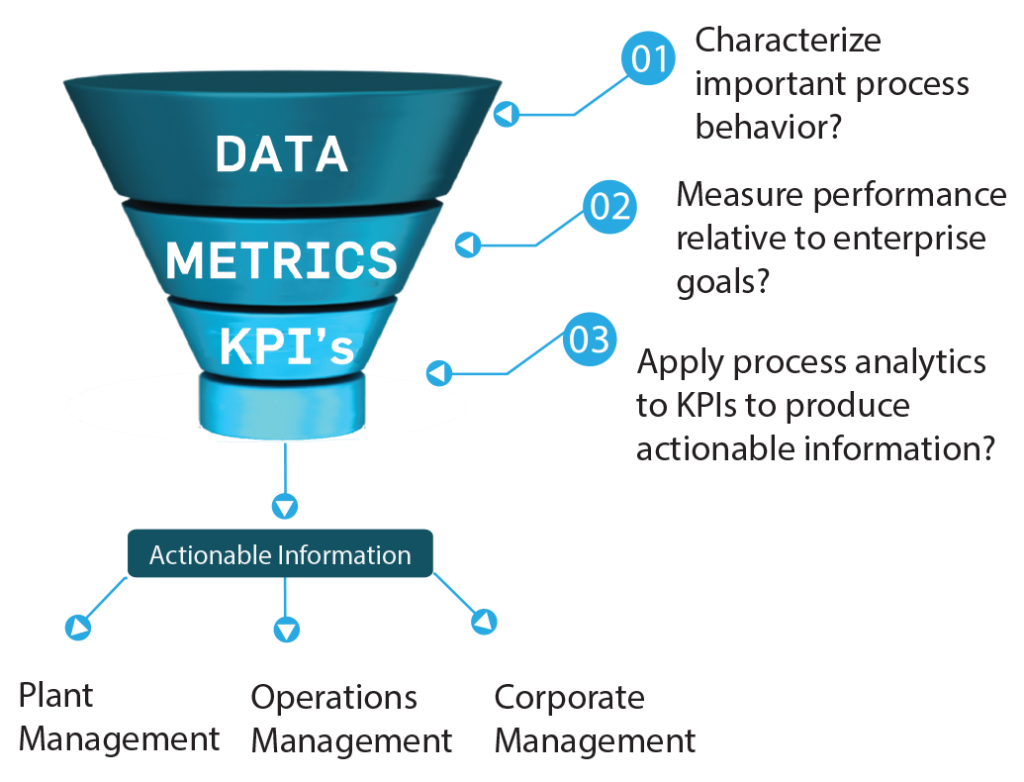

2. Key Metrics and KPIs for Measuring DataOps ROI

To measure DataOps ROI effectively, focus on these essential metrics:

a. Pipeline Efficiency

- Pipeline Throughput: Measures the volume of data processed over a given period (for example, rows or gigabytes per hour), indicating how efficiently DataOps workflows handle data.

- Pipeline Latency: Tracks the time it takes for data to move through the pipeline from ingestion to delivery. Reduced latency suggests an optimized pipeline with minimal bottlenecks.

- Time-to-Insight: The time from data ingestion to actionable insights. A shorter time-to-insight reflects faster data processing and decision-making, enhancing DataOps value.
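As a rough illustration, all three pipeline-efficiency metrics can be derived from per-run timestamps. This sketch assumes each run records when data was ingested, when it was delivered, and when it was first used for a decision. The `PipelineRun` record and `efficiency_metrics` function are hypothetical names, not part of any DataOps tool.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PipelineRun:
    """One batch moving through a pipeline (hypothetical record shape)."""
    ingested_at: datetime   # data entered the pipeline
    delivered_at: datetime  # data landed in the target system
    insight_at: datetime    # a consumer first acted on it (e.g., a dashboard refresh)
    rows: int               # rows processed in this run


def efficiency_metrics(runs: list[PipelineRun], window: timedelta) -> dict[str, float]:
    """Throughput over a reporting window, plus average latency and time-to-insight."""
    if not runs:
        raise ValueError("need at least one run")
    total_rows = sum(r.rows for r in runs)
    latencies = [(r.delivered_at - r.ingested_at).total_seconds() for r in runs]
    time_to_insight = [(r.insight_at - r.ingested_at).total_seconds() for r in runs]
    return {
        "throughput_rows_per_hour": total_rows / (window.total_seconds() / 3600),
        "avg_latency_seconds": sum(latencies) / len(latencies),
        "avg_time_to_insight_seconds": sum(time_to_insight) / len(time_to_insight),
    }


# Usage with made-up timestamps: two runs inside a two-hour window.
t0 = datetime(2024, 1, 1, 0, 0)
runs = [
    PipelineRun(t0, t0 + timedelta(minutes=10), t0 + timedelta(minutes=40), 50_000),
    PipelineRun(t0 + timedelta(hours=1), t0 + timedelta(hours=1, minutes=20),
                t0 + timedelta(hours=2), 70_000),
]
print(efficiency_metrics(runs, timedelta(hours=2)))
```

Averages are easy to read but can hide occasional slow runs, so a team might also track a high percentile of latency alongside the mean.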

b. Data Quality Metrics

- Error Rate: Measures the percentage of records (or pipeline runs) that fail processing or validation. A lower error rate indicates effective quality checks and reliable data processes.

- Data Completeness: Tracks whether expected records and required fields are present when data reaches downstream systems, ensuring no critical information is lost in transit.

- Data Accuracy: Measures how closely data values match the real-world facts or trusted source systems they represent, which directly impacts the reliability of analytics and decision-making.
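One possible way to turn these three quality metrics into numbers is sketched below. It assumes records arrive as Python dicts, that "complete" means a required field is neither `None` nor an empty string, and that accuracy is checked against a trusted reference lookup. The function names and the reference data are hypothetical.

```python
from typing import Any

Record = dict[str, Any]


def error_rate(total_records: int, failed_records: int) -> float:
    """Percentage of records that failed processing or validation."""
    return 100.0 * failed_records / total_records if total_records else 0.0


def completeness(records: list[Record], required_fields: list[str]) -> float:
    """Percentage of required field values that are present (not None or empty)."""
    cells = len(records) * len(required_fields)
    filled = sum(
        1 for r in records for f in required_fields if r.get(f) not in (None, "")
    )
    return 100.0 * filled / cells if cells else 100.0


def accuracy(records: list[Record], reference: dict[Any, Any], key: str, field: str) -> float:
    """Percentage of records whose `field` matches a trusted reference value."""
    checked = [r for r in records if r.get(key) in reference]
    correct = sum(1 for r in checked if r.get(field) == reference[r[key]])
    return 100.0 * correct / len(checked) if checked else 100.0


records = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": None, "country": "DE"},
    {"id": 3, "email": "c@example.com", "country": "FR"},
]
reference_country = {1: "US", 2: "DE", 3: "ES"}  # hypothetical source of truth

print(error_rate(total_records=1_000, failed_records=25))                # 2.5
print(round(completeness(records, ["email", "country"]), 1))             # 83.3
print(round(accuracy(records, reference_country, "id", "country"), 1))   # 66.7
```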

c. Cost Metrics

- Cost Per Pipeline: Calculates the cost associated with building and maintaining each data pipeline. Lower costs indicate more efficient use of resources and optimized pipelines.

- Cost Savings from Automation: Quantifies cost reductions achieved by automating manual data tasks. Automation minimizes labor-intensive processes, freeing up resources for more strategic work.

- Cost of Downtime: Tracks the financial impact of pipeline downtime or failures. A reduction in downtime costs signals increased pipeline reliability.
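The cost metrics reduce to simple arithmetic once the inputs are estimated. In this minimal sketch, the hourly labor rate, hours saved, and cost per hour of downtime are assumptions an organization would have to supply itself; the figures and function names below are hypothetical.

```python
def cost_per_pipeline(total_build_cost: float, total_maintenance_cost: float,
                      pipeline_count: int) -> float:
    """Average build-plus-maintenance cost per pipeline over the same period."""
    return (total_build_cost + total_maintenance_cost) / pipeline_count


def automation_savings(hours_saved: float, hourly_rate: float, automation_cost: float) -> float:
    """Net savings: value of manual hours no longer spent, minus what the automation costs."""
    return hours_saved * hourly_rate - automation_cost


def downtime_cost(downtime_hours: float, cost_per_hour: float) -> float:
    """Estimated financial impact of pipeline outages."""
    return downtime_hours * cost_per_hour


# Hypothetical annual figures for one team.
print(cost_per_pipeline(180_000, 60_000, 12))   # 20000.0
print(automation_savings(1_920, 75, 48_000))    # 96000
before, after = downtime_cost(20, 5_000), downtime_cost(6, 5_000)
print(before - after)                           # 70000 saved by reducing downtime
```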

d. Productivity and Collaboration

- Team Productivity: Measures output per DataOps team member, such as pipelines delivered or data requests fulfilled per engineer. An increase in productivity reflects streamlined processes and effective team collaboration.

- Cross-Functional Collaboration: Tracks the frequency and quality of collaboration across data, engineering, and business teams. Effective collaboration often leads to faster issue resolution and higher-quality data.

- Issue Resolution Time: Measures the time taken to detect, diagnose, and fix pipeline issues. Faster resolution times reflect proactive monitoring and effective response strategies.
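Issue resolution time is often summarized as a mean time to detect and a mean time to resolve. The sketch below assumes each incident records when it started, when it was detected, and when it was resolved (diagnosis and fix are folded into "resolved"); the `Incident` record is a hypothetical shape, not a specific incident-tracker schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import mean


@dataclass
class Incident:
    started_at: datetime   # when the pipeline issue began
    detected_at: datetime  # when monitoring or a user flagged it
    resolved_at: datetime  # when the fix was deployed and data was correct again


def minutes(delta: timedelta) -> float:
    return delta.total_seconds() / 60


def resolution_metrics(incidents: list[Incident]) -> dict[str, float]:
    """Mean minutes from incident start to detection, and from start to resolution."""
    return {
        "mean_time_to_detect_min": mean(minutes(i.detected_at - i.started_at) for i in incidents),
        "mean_time_to_resolve_min": mean(minutes(i.resolved_at - i.started_at) for i in incidents),
    }


incidents = [
    Incident(datetime(2024, 3, 1, 9, 0), datetime(2024, 3, 1, 9, 10), datetime(2024, 3, 1, 10, 0)),
    Incident(datetime(2024, 3, 5, 14, 0), datetime(2024, 3, 5, 14, 20), datetime(2024, 3, 5, 14, 50)),
]
print(resolution_metrics(incidents))
# {'mean_time_to_detect_min': 15.0, 'mean_time_to_resolve_min': 55.0}
```

Splitting detection from resolution helps show whether faster recovery comes from better monitoring or from faster fixes.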

e. Agility and Flexibility

- Deployment Frequency: Tracks how often data pipelines are deployed, updated, or improved. Higher deployment frequency indicates a flexible, Agile-driven DataOps environment.

- Change Lead Time: Measures the time between identifying the need for a change in data processes and implementing it. Shorter lead times reflect agility in adapting to new requirements.

- Scaling Efficiency: Tracks how easily pipelines handle increased data volumes or new sources. Efficient scaling demonstrates a robust DataOps framework capable of supporting growth.
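Deployment frequency and change lead time can be computed from a log of changes. This sketch follows the definition above (lead time runs from identifying the need for a change to deploying it) and assumes both timestamps are recorded; the `Change` record and the sample dates are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass
class Change:
    requested_at: datetime  # when the need for the change was identified
    deployed_at: datetime   # when the change went live in production


def agility_metrics(changes: list[Change], period_days: int) -> dict[str, float]:
    """Deployments per week over the period, and median change lead time in hours."""
    lead_times_h = [(c.deployed_at - c.requested_at).total_seconds() / 3600 for c in changes]
    return {
        "deployments_per_week": len(changes) / (period_days / 7),
        "median_change_lead_time_h": median(lead_times_h),
    }


changes = [
    Change(datetime(2024, 4, 1), datetime(2024, 4, 2)),    # 24 h
    Change(datetime(2024, 4, 8), datetime(2024, 4, 10)),   # 48 h
    Change(datetime(2024, 4, 15), datetime(2024, 4, 18)),  # 72 h
    Change(datetime(2024, 4, 22), datetime(2024, 4, 26)),  # 96 h
]
print(agility_metrics(changes, period_days=28))
# {'deployments_per_week': 1.0, 'median_change_lead_time_h': 60.0}
```

The median is used here because a few long-running changes can pull an average far above the typical case.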

f. Customer Satisfaction and Impact

- User Satisfaction: Collect feedback from data users, such as analysts and business teams, on the quality, reliability, and accessibility of data provided by DataOps.

- Impact on Business Decisions: Measure the effectiveness of DataOps-driven insights by tracking their influence on key business decisions, such as time-to-market, customer insights, or operational improvements.

- Reduced Time-to-Market: Quantify how DataOps accelerates product or feature launches by providing faster, more reliable data insights.

3. Calculating the ROI of DataOps

To determine the ROI of DataOps, use the following formula:

DataOps ROI (%) = [(Total Benefits – Total Costs) / Total Costs] × 100

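- Total Benefits: Sum up the financial impact of improvements over a defined period (for example, one year), such as increased productivity, cost savings from automation, reduced downtime, and enhanced decision-making. Less tangible benefits, like better decisions, need a monetary estimate before they can be included.

- Total Costs: Include costs incurred over the same period, such as DataOps tools, training, pipeline development, and ongoing maintenance.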
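A worked example of the ROI formula, written as a small Python function. The benefit and cost categories mirror the ones listed above, but every figure is hypothetical and assumes all amounts are in the same currency and cover the same period.

```python
def dataops_roi(benefits: dict[str, float], costs: dict[str, float]) -> float:
    """DataOps ROI (%) = (total benefits - total costs) / total costs * 100."""
    total_benefits = sum(benefits.values())
    total_costs = sum(costs.values())
    if total_costs <= 0:
        raise ValueError("total costs must be positive")
    return (total_benefits - total_costs) / total_costs * 100


# Hypothetical annual figures, same currency and period for both sides.
benefits = {
    "productivity_gains": 150_000,
    "automation_savings": 96_000,
    "avoided_downtime": 54_000,
}
costs = {
    "tools": 80_000,
    "training": 20_000,
    "pipeline_development": 60_000,
    "maintenance": 40_000,
}
print(dataops_roi(benefits, costs))  # 50.0
```

Here total benefits of 300,000 against total costs of 200,000 give (300,000 - 200,000) / 200,000 × 100 = 50% ROI. Keeping benefits and costs as itemized dictionaries makes it easy to see which line items drive the result.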

4. Best Practices for Maximizing DataOps ROI

a. Automate Data Quality Checks

- Automate Validation and Cleansing: Incorporate automated quality checks and data cleansing to reduce manual tasks and ensure data reliability.

- Use Real-Time Monitoring: Implement real-time data monitoring to detect quality issues early and prevent delays.
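A minimal sketch of automated validation and cleansing, assuming records are Python dicts and that quality rules can be expressed as simple predicates. Failing records are quarantined rather than dropped, and the batch is flagged for alerting when the failure rate exceeds a threshold; in a streaming setup the same check could run on each micro-batch. The rule names, `cleanse` step, and 5% threshold are hypothetical choices, not a particular tool's API.

```python
from typing import Any, Callable

Record = dict[str, Any]
Rule = Callable[[Record], bool]

# Hypothetical validation rules; real rules would come from your data contracts.
RULES: dict[str, Rule] = {
    "id_present": lambda r: r.get("id") is not None,
    "amount_non_negative": lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0,
}


def cleanse(record: Record) -> Record:
    """Example cleansing step: normalize email addresses (returns a copy)."""
    out = dict(record)
    if isinstance(out.get("email"), str):
        out["email"] = out["email"].strip().lower()
    return out


def validate_batch(records: list[Record], rules: dict[str, Rule],
                   max_failure_rate: float = 0.05):
    """Cleanse each record, split valid from quarantined, and flag the batch for alerting."""
    valid: list[Record] = []
    quarantined: list[dict[str, Any]] = []
    for rec in map(cleanse, records):
        failed = [name for name, rule in rules.items() if not rule(rec)]
        if failed:
            quarantined.append({"record": rec, "failed_rules": failed})
        else:
            valid.append(rec)
    failure_rate = len(quarantined) / len(records) if records else 0.0
    return valid, quarantined, failure_rate > max_failure_rate


batch = [
    {"id": 1, "amount": 10, "email": "  Ana@Example.COM "},
    {"id": None, "amount": 5},
    {"id": 3, "amount": -2},
]
valid, quarantined, alert = validate_batch(batch, RULES)
print(len(valid), len(quarantined), alert)  # 1 2 True
```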

b. Focus on Collaboration

- Encourage Cross-Functional Teams: Break down silos by fostering collaboration among data engineers, data scientists, and business analysts.

- Align Data Goals with Business Needs: Regularly review DataOps practices to ensure alignment with changing business objectives.

c. Optimize Pipeline Performance

- Regularly Assess and Refine Pipelines: Conduct performance audits and refine pipeline configurations for improved throughput and reduced latency.

- Invest in Scalable Infrastructure: Choose scalable infrastructure options (e.g., cloud-based services) that support efficient growth as data demands increase.
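A performance audit can start by finding which pipeline stage dominates latency. This sketch assumes per-run stage durations are already available from pipeline logs, computes a nearest-rank percentile per stage, and reports the slowest stage. The stage names, durations, and function names are hypothetical.

```python
import math


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile, pct in (0, 100]."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def audit_stages(stage_seconds: dict[str, list[float]],
                 pct: float = 95) -> tuple[dict[str, float], str]:
    """Return the pct-th percentile duration per stage and the slowest stage."""
    report = {stage: percentile(durations, pct) for stage, durations in stage_seconds.items()}
    bottleneck = max(report, key=report.get)
    return report, bottleneck


# Hypothetical per-run stage durations (seconds) pulled from pipeline logs.
durations = {
    "extract": [30, 32, 31, 35, 90],
    "transform": [120, 130, 125, 400, 128],
    "load": [20, 22, 21, 25, 23],
}
report, bottleneck = audit_stages(durations)
print(report, bottleneck)
# {'extract': 90, 'transform': 400, 'load': 25} transform
```

With only five runs per stage, the 95th percentile is simply the slowest run; with more history it becomes a steadier signal of tail latency.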

d. Continuously Improve Through Feedback

- Gather User Feedback: Collect feedback from data consumers to understand their needs and address any gaps in data accessibility, quality, or usability.

- Iterate Based on KPIs: Use KPI results to make continuous adjustments to DataOps processes, ensuring they are always evolving and improving.
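To make "iterate based on KPIs" concrete, one option is to compare each KPI against a target and list the ones that are off track or missing data. The KPI names and targets below are hypothetical; each target states whether a higher or lower value is better.

```python
from dataclasses import dataclass


@dataclass
class KpiTarget:
    name: str
    target: float
    higher_is_better: bool


def kpis_needing_attention(current: dict[str, float], targets: list[KpiTarget]) -> list[str]:
    """List KPIs that are missing or off target, to prioritize the next iteration."""
    flagged = []
    for t in targets:
        value = current.get(t.name)
        if value is None:
            flagged.append(f"{t.name}: no data")
            continue
        on_track = value >= t.target if t.higher_is_better else value <= t.target
        if not on_track:
            flagged.append(f"{t.name}: {value} vs target {t.target}")
    return flagged


targets = [
    KpiTarget("error_rate_pct", 1.0, higher_is_better=False),
    KpiTarget("completeness_pct", 99.0, higher_is_better=True),
    KpiTarget("avg_latency_min", 15.0, higher_is_better=False),
    KpiTarget("deployments_per_week", 2.0, higher_is_better=True),
]
current = {"error_rate_pct": 2.5, "completeness_pct": 99.4,
           "avg_latency_min": 12.0, "deployments_per_week": 1.0}
for item in kpis_needing_attention(current, targets):
    print(item)
# error_rate_pct: 2.5 vs target 1.0
# deployments_per_week: 1.0 vs target 2.0
```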