Data integration tools are used to combine, cleanse, and transform data from various sources into a unified format, making it suitable for analysis, reporting, and other business purposes.

Top 5 Use Cases of Data Integration Tools:

- Data Warehousing: Integrating data from multiple sources into a data warehouse for centralized storage and analysis.

- Business Intelligence: Providing clean and organized data to business intelligence tools for generating insights and reports.

- Data Migration: Moving data from one system to another during system upgrades or migrations.

- Real-time Data Streaming: Processing and integrating data streams in real time for immediate analysis.

- Data Synchronization: Ensuring data consistency across different systems or databases.
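Data synchronization often comes down to comparing two copies of a dataset by a key and working out which rows must be inserted, updated, or deleted so the target matches the source. A minimal Python sketch of that comparison, assuming both systems can export their rows as dictionaries that share a unique `id` column (the function `diff_by_key` and the sample rows are hypothetical):

```python
from typing import Dict, List, Tuple

Record = Dict[str, object]


def diff_by_key(
    source: List[Record], target: List[Record], key: str
) -> Tuple[List[Record], List[Record], List[object]]:
    """Return (inserts, updates, deletes) that would make target match source."""
    src = {row[key]: row for row in source}
    tgt = {row[key]: row for row in target}

    inserts = [row for k, row in src.items() if k not in tgt]                 # only in source
    updates = [row for k, row in src.items() if k in tgt and tgt[k] != row]   # present in both, but changed
    deletes = [k for k in tgt if k not in src]                                # only in target
    return inserts, updates, deletes


source = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "Grace"}, {"id": 4, "name": "Linus"}]
target = [{"id": 1, "name": "Ada"}, {"id": 2, "name": "G. Hopper"}, {"id": 3, "name": "Alan"}]

inserts, updates, deletes = diff_by_key(source, target, key="id")
print(inserts)  # [{'id': 4, 'name': 'Linus'}]
print(updates)  # [{'id': 2, 'name': 'Grace'}]
print(deletes)  # [3]
```

Indexing both sides by `id` first keeps the comparison linear in the number of rows instead of comparing every pair.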

Features of Data Integration Tools:

- Connectivity to various data sources (databases, files, cloud services, etc.).

- Data transformation and mapping capabilities.

- Workflow automation and scheduling.

- Error handling and logging for data integrity.

- Scalability to handle large volumes of data.

- Support for real-time and batch data integration.

- Monitoring and performance optimization.
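To make several of these features concrete (connectivity, transformation and mapping, error handling with logging), here is a minimal extract-transform-load sketch in plain Python with a CSV source and an in-memory SQLite target. It is a toy illustration under assumed data and names (`RAW_CSV`, the `orders` table), not how any of the tools below work internally:

```python
import csv
import io
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl")

# Hypothetical source data; a real job would read from a file, API, or database.
RAW_CSV = """order_id,amount,currency
1001,19.99,usd
1002,not-a-number,usd
1003,5.00,eur
"""


def extract(text: str):
    """Extract: parse CSV rows into dictionaries."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows):
    """Transform: cast types and normalize values; log and skip rows that fail."""
    clean = []
    for row in rows:
        try:
            clean.append((int(row["order_id"]), float(row["amount"]), row["currency"].upper()))
        except (KeyError, ValueError, AttributeError) as exc:
            log.warning("rejected row %r: %s", row, exc)
    return clean


def load(conn: sqlite3.Connection, rows) -> int:
    """Load: write the cleaned rows into the target table in one transaction."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, amount REAL, currency TEXT)"
    )
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT OR REPLACE INTO orders VALUES (?, ?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")
loaded = load(conn, transform(extract(RAW_CSV)))
log.info("loaded %d rows", loaded)
```

The malformed row is logged and skipped rather than aborting the whole load, which is the usual trade-off between strictness and throughput.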

List of Data Integration Tools

1. Apache NiFi

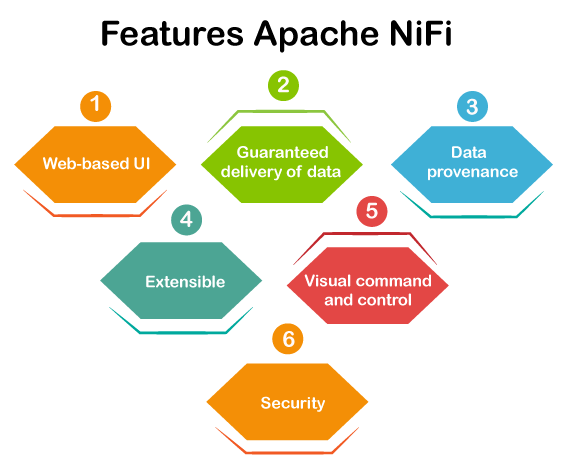

Apache NiFi is an open-source data integration tool designed to automate the flow of data between various systems, applications, and data sources. It provides a web-based user interface for designing, managing, and monitoring data flows (data pipelines) using a visual drag-and-drop interface. NiFi is developed as a top-level project of the Apache Software Foundation.

Key Features of Apache NiFi:

Data Flow Visualization: NiFi offers a user-friendly web-based UI that allows users to design data flows by simply dragging and dropping processors, connections, and other components on the canvas. This visual representation makes it easier to understand and manage complex data flows.

Data Routing and Transformation: NiFi supports routing, filtering, and transformation of data. It can handle data in various formats, such as XML, JSON, CSV, Avro, etc., and transform it as required using processors.
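In NiFi this is configured on the canvas with processors (for example ConvertRecord for format conversion) rather than written as code. As a rough plain-Python analogue, assuming hypothetical sensor records and a `region` field to route on, the sketch below converts CSV records to JSON and groups them into routes, with records lacking a value sent to an "unmatched" route:

```python
import csv
import io
import json
from collections import defaultdict


def convert_and_route(csv_text: str, route_field: str):
    """Parse CSV records, serialize each as JSON, and group them by route_field.

    Records without a value for route_field go to an "unmatched" route, loosely
    mirroring how a flow sends data down different connections.
    """
    routes = defaultdict(list)
    for record in csv.DictReader(io.StringIO(csv_text)):
        destination = record.get(route_field) or "unmatched"
        routes[destination].append(json.dumps(record))
    return dict(routes)


sample = """sensor,region,reading
s1,eu,21.5
s2,us,19.0
s3,,18.2
"""

for route, payloads in convert_and_route(sample, "region").items():
    print(route, payloads)
```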

Scalability: NiFi is built to handle high volumes of data and can be scaled horizontally by running multiple nodes as a cluster.

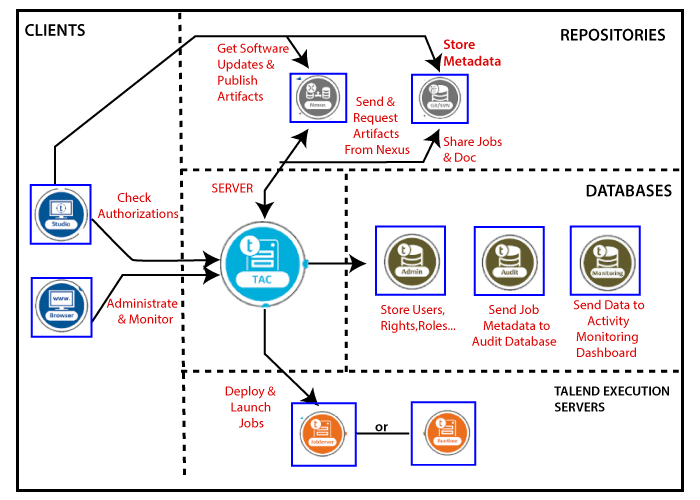

2. Talend

Talend is a popular open-source data integration and ETL (Extract, Transform, Load) tool that enables organizations to connect, access, transform, and integrate data from various sources. It provides a unified platform for data integration, data quality, data governance, data preparation, and data stewardship. Talend was founded in 2005 and has since grown to become a leading player in the data integration and management space.

Key Features of Talend:

Data Integration: Talend allows users to design and execute data integration workflows, commonly known as Jobs, using a graphical drag-and-drop interface. It supports a wide range of data sources and targets, including databases, cloud storage, flat files, APIs, and more.

ETL and Data Transformation: Talend provides a rich set of transformation components to manipulate and cleanse data during the ETL process. These components allow users to perform operations such as filtering, sorting, aggregating, merging, and data enrichment.
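Talend assembles these steps from graphical components rather than hand-written code, but the operations themselves are easy to show. A minimal plain-Python sketch of filtering, aggregating, merging/enriching, and sorting, using made-up order and customer data (it does not reflect Talend's generated code):

```python
from collections import defaultdict

# Hypothetical input data standing in for two source systems.
orders = [
    {"order_id": 1, "customer_id": "C1", "amount": 120.0, "status": "shipped"},
    {"order_id": 2, "customer_id": "C2", "amount": 35.5, "status": "cancelled"},
    {"order_id": 3, "customer_id": "C1", "amount": 60.0, "status": "shipped"},
    {"order_id": 4, "customer_id": "C3", "amount": 80.0, "status": "shipped"},
]
customers = {"C1": {"name": "Acme", "country": "DE"}, "C3": {"name": "Globex", "country": "US"}}

# Filter: keep only shipped orders.
shipped = [o for o in orders if o["status"] == "shipped"]

# Aggregate: total shipped amount per customer.
totals = defaultdict(float)
for o in shipped:
    totals[o["customer_id"]] += o["amount"]

# Merge / enrich: left-join the totals with customer reference data.
report = [
    {"customer_id": cid, "total": total, **customers.get(cid, {"name": None, "country": None})}
    for cid, total in totals.items()
]

# Sort: largest customers first.
report.sort(key=lambda row: row["total"], reverse=True)

for row in report:
    print(row)
```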

Real-Time Data Integration: Talend supports real-time data integration, allowing users to process streaming data and respond to events as they occur.
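Real-time integration usually means computing results while data arrives rather than after a batch completes. As a generic illustration (plain Python, not Talend's streaming components), here is a tumbling-window counter that emits one result per fixed time window; the `(timestamp, event_type)` format and the 10-second window are assumptions:

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (timestamp in seconds, event type); hypothetical format


def tumbling_counts(events: Iterable[Event], window_seconds: int) -> Iterator[Tuple[int, dict]]:
    """Count events per type in fixed, non-overlapping time windows.

    Assumes events arrive in timestamp order. Each window is emitted as soon as
    an event from a later window is seen, so results come out incrementally.
    """
    current_window = None
    counts = defaultdict(int)
    for ts, kind in events:
        window = ts - ts % window_seconds  # start of the window this event falls in
        if current_window is not None and window != current_window:
            yield current_window, dict(counts)
            counts.clear()
        current_window = window
        counts[kind] += 1
    if current_window is not None:
        yield current_window, dict(counts)  # flush the last open window


stream = [(0, "click"), (3, "view"), (4, "click"), (11, "click"), (25, "view")]
for window_start, window_counts in tumbling_counts(stream, window_seconds=10):
    print(window_start, window_counts)
# 0 {'click': 2, 'view': 1}
# 10 {'click': 1}
# 20 {'view': 1}
```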

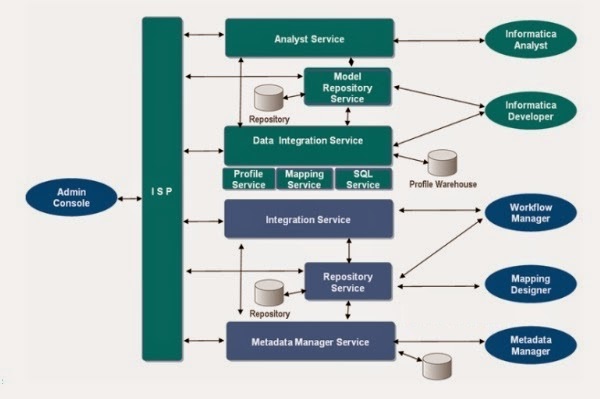

3. Informatica

Informatica is a leading data integration and data management software suite developed by Informatica. It is widely used by organizations to integrate, cleanse, transform, and manage data from various sources across the enterprise. Informatica offers a comprehensive set of tools and solutions to address data integration, data quality, data governance, data analytics, and more.

Key Features of Informatica:

Data Integration: Informatica provides a powerful data integration platform that enables seamless data movement between different systems, applications, and databases. It supports batch processing as well as real-time data integration.

ETL and Data Transformation: Informatica PowerCenter, one of the flagship products, offers robust ETL (Extract, Transform, Load) capabilities. It allows users to design and execute complex data transformation workflows to cleanse, enrich, and prepare data for further analysis and reporting.

Data Quality and Profiling: Informatica Data Quality (IDQ) is a component that helps identify and resolve data quality issues. It provides data profiling capabilities to analyze the quality and completeness of data, enabling organizations to maintain high data accuracy.
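Data profiling boils down to measuring properties such as how complete each column is and how many distinct values it holds. The sketch below computes a few such statistics in plain Python over made-up rows; it is a generic illustration, not Informatica Data Quality's API or its full set of metrics, and treating empty strings as missing is an assumption:

```python
from collections import Counter
from typing import Dict, List


def profile(rows: List[Dict[str, object]]) -> Dict[str, Dict[str, object]]:
    """Per-column completeness, distinct count, and most common value."""
    columns = {col for row in rows for col in row}
    report = {}
    for col in sorted(columns):
        values = [row.get(col) for row in rows]              # missing keys count as None
        present = [v for v in values if v not in (None, "")]  # treat empty strings as missing
        report[col] = {
            "completeness": len(present) / len(rows) if rows else 0.0,
            "distinct": len(set(present)),
            "most_common": Counter(present).most_common(1)[0][0] if present else None,
        }
    return report


rows = [
    {"id": 1, "email": "a@example.com", "country": "DE"},
    {"id": 2, "email": "", "country": "DE"},
    {"id": 3, "email": None, "country": "FR"},
    {"id": 4, "email": "d@example.com"},
]
for column, stats in profile(rows).items():
    print(column, stats)
```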

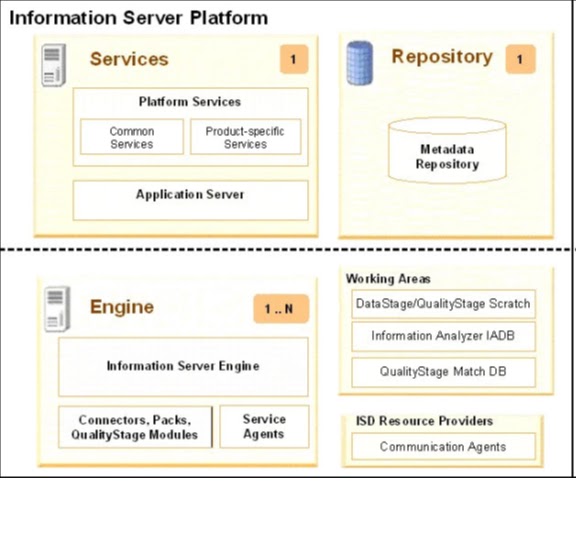

4. IBM InfoSphere DataStage

IBM InfoSphere DataStage is a data integration and ETL (Extract, Transform, Load) tool developed by IBM. It is designed to help organizations extract data from various sources, transform it, and load it into target systems, data warehouses, and data marts. InfoSphere DataStage is part of the IBM InfoSphere Information Server suite, which provides a comprehensive platform for data integration, data quality, data governance, and data analytics.

Key Features of IBM InfoSphere DataStage:

Data Integration and ETL: InfoSphere DataStage allows users to design and build data integration workflows (jobs) using a graphical interface. These jobs consist of data extraction from source systems, data transformations, data enrichment, and data loading into target systems.

Parallel Processing: DataStage leverages parallel processing to optimize performance and scalability. It can execute data integration jobs in parallel, enabling faster processing of large volumes of data.
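The general idea behind partitioned parallelism (split data by key, run the same logic on each partition concurrently, then combine) can be sketched in Python. This is a conceptual illustration with hypothetical data, not DataStage's engine or job design:

```python
import zlib
from concurrent.futures import ThreadPoolExecutor
from typing import Dict, List, Tuple

Row = Tuple[str, float]  # (customer_id, amount); hypothetical record shape


def hash_partition(rows: List[Row], n: int) -> List[List[Row]]:
    """Split rows into n partitions so that all rows sharing a key land together."""
    parts: List[List[Row]] = [[] for _ in range(n)]
    for row in rows:
        # zlib.crc32 is deterministic, unlike the built-in hash() for str,
        # which is randomized per process.
        parts[zlib.crc32(row[0].encode("utf-8")) % n].append(row)
    return parts


def sum_by_key(part: List[Row]) -> Dict[str, float]:
    """Work done independently on one partition: total the amounts per key."""
    totals: Dict[str, float] = {}
    for key, amount in part:
        totals[key] = totals.get(key, 0.0) + amount
    return totals


def parallel_sum(rows: List[Row], n: int = 4) -> Dict[str, float]:
    """Run sum_by_key on every partition concurrently and combine the results.

    Threads keep the sketch simple; because of CPython's GIL, CPU-bound work
    would need a ProcessPoolExecutor to run truly in parallel.
    """
    result: Dict[str, float] = {}
    with ThreadPoolExecutor(max_workers=n) as pool:
        for partial in pool.map(sum_by_key, hash_partition(rows, n)):
            result.update(partial)  # safe: hash partitioning keeps keys disjoint
    return result


data = [("C1", 10.0), ("C2", 5.0), ("C1", 2.5), ("C3", 7.0), ("C2", 1.0)]
print(parallel_sum(data, n=2))
```

Partitioning on the aggregation key is what lets the final step be a plain union; if rows for one key were spread across partitions, the partial totals would have to be added together instead.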

Data Transformation: InfoSphere DataStage offers a wide range of built-in transformation functions and operators to manipulate and cleanse data during the ETL process. It supports data type conversions, aggregations, lookups, data filtering, and more.

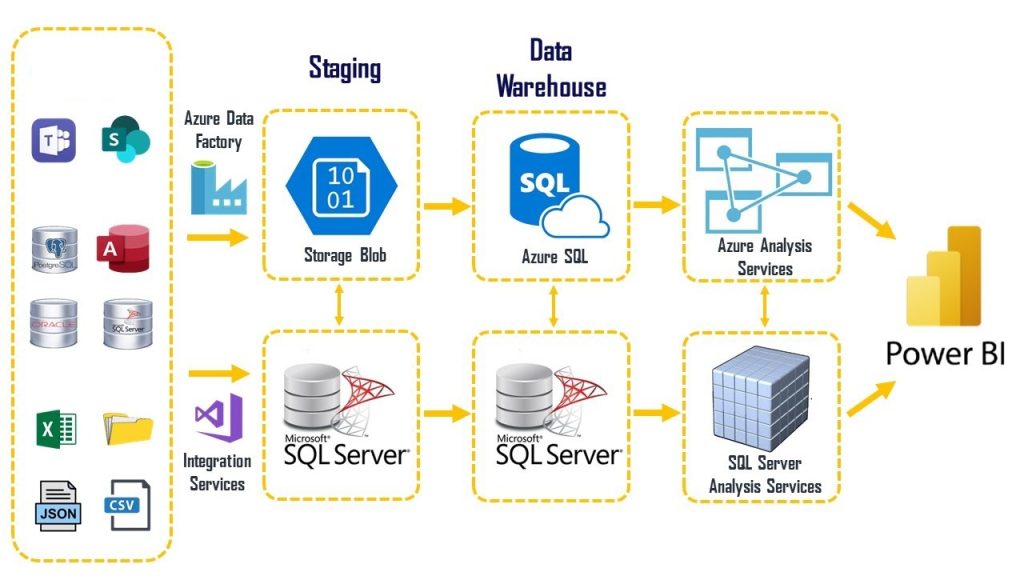

5. Microsoft SQL Server Integration Services (SSIS)

Microsoft SQL Server Integration Services (SSIS) is a data integration and ETL (Extract, Transform, Load) tool provided by Microsoft as part of SQL Server. It is designed to facilitate the creation, execution, and management of data integration workflows for extracting, transforming, and loading data between various data sources and destinations. SSIS is widely used for data warehousing, business intelligence, and data migration projects.

Key Features of Microsoft SQL Server Integration Services (SSIS):

Visual Design Environment: SSIS packages are built in SSIS Designer, which runs inside SQL Server Data Tools (SSDT) in Visual Studio. Users design data integration workflows by dragging and dropping tasks and components onto the design surface. SQL Server Management Studio (SSMS) is used to deploy, run, and manage packages rather than to design them.

Connectivity and Data Sources: SSIS supports various data sources, including relational databases (SQL Server, Oracle, MySQL, etc.), flat files, Excel spreadsheets, web services, and more. It can also connect to other Microsoft products such as SharePoint and Dynamics.

Data Transformations: SSIS includes a rich set of data flow transformations such as Data Conversion, Lookup, Aggregate, Pivot, Sort, Merge, and Conditional Split. These transformations allow users to manipulate and cleanse data during the ETL process.
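Conditional Split sends each row to the first output whose condition evaluates to true and sends rows that match no condition to a default output. The sketch below mimics that behavior in Python; in SSIS the conditions are SSIS expressions set in the designer, whereas here lambdas stand in for them, and the output names and sample rows are hypothetical:

```python
from typing import Callable, Dict, List, Sequence, Tuple

Row = Dict[str, object]
Condition = Tuple[str, Callable[[Row], bool]]


def conditional_split(
    rows: List[Row], conditions: Sequence[Condition], default: str = "default"
) -> Dict[str, List[Row]]:
    """Route each row to the first output whose condition is true, else to the default."""
    outputs: Dict[str, List[Row]] = {name: [] for name, _ in conditions}
    outputs[default] = []
    for row in rows:
        for name, predicate in conditions:  # conditions are checked in order
            if predicate(row):
                outputs[name].append(row)
                break
        else:  # no condition matched
            outputs[default].append(row)
    return outputs


rows = [{"id": 1, "amount": 5000}, {"id": 2, "amount": 150}, {"id": 3, "amount": -20}]
outputs = conditional_split(
    rows,
    [
        ("Invalid", lambda r: r["amount"] < 0),
        ("Large", lambda r: r["amount"] >= 1000),
    ],
)
for name, matched in outputs.items():
    print(name, [r["id"] for r in matched])
# Invalid [3]
# Large [1]
# default [2]
```

Because evaluation stops at the first match, the order of the conditions matters: a row that satisfies several conditions only ever appears in one output.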

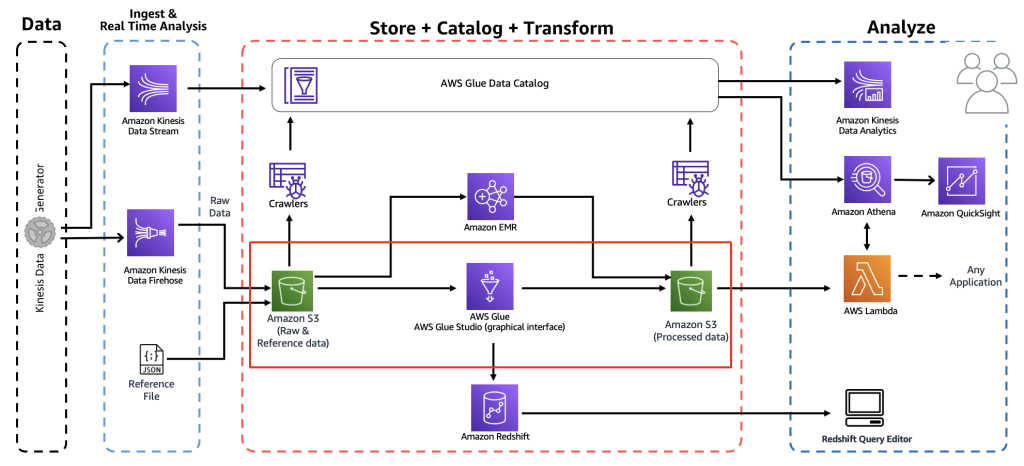

6. AWS Glue

AWS Glue is a fully managed extract, transform, and load (ETL) service provided by Amazon Web Services (AWS). It simplifies and automates the process of preparing and loading data from various sources into data lakes, data warehouses, and other data storage solutions. AWS Glue is designed to handle both batch and streaming data and is part of the AWS analytics and big data ecosystem.

Key Features of AWS Glue:

Data Catalog: AWS Glue provides a centralized metadata repository called the Data Catalog. It stores metadata about data sources, data targets, and transformations, making it easier to discover, manage, and track data assets.
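The Data Catalog can be queried programmatically. The sketch below uses the `get_tables` paginator from the boto3 Glue client to list each table's name, storage location, and columns. To keep it runnable without AWS access, the client is passed in as a parameter; the function name `list_catalog_tables` is hypothetical:

```python
from typing import Dict, Iterator, List


def list_catalog_tables(glue_client, database: str) -> Iterator[Dict[str, object]]:
    """Yield name, location, and column schema for every table in a Data Catalog database.

    glue_client is expected to behave like boto3.client("glue"); the "get_tables"
    paginator follows NextToken across result pages automatically.
    """
    paginator = glue_client.get_paginator("get_tables")
    for page in paginator.paginate(DatabaseName=database):
        for table in page["TableList"]:
            descriptor = table.get("StorageDescriptor", {})
            columns: List[Dict[str, str]] = descriptor.get("Columns", [])
            yield {
                "name": table["Name"],
                "location": descriptor.get("Location"),
                "columns": [(c["Name"], c.get("Type")) for c in columns],
            }
```

With boto3 installed and AWS credentials configured, it would be called as `list_catalog_tables(boto3.client("glue"), "sales_db")`, where `sales_db` is a placeholder database name.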

Integration with AWS Services: AWS Glue integrates with other AWS services like Amazon S3, Amazon Redshift, Amazon RDS, and AWS Lambda, allowing users to build end-to-end data processing pipelines.

Security: AWS Glue supports encrypting data at rest with keys managed in AWS Key Management Service (KMS), and it supports TLS-encrypted connections for data in transit between data sources and targets.
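Encryption at rest for Glue jobs is configured through a security configuration. The sketch below builds the keyword arguments for the boto3 Glue client's `create_security_configuration` call, enabling KMS encryption for data written to Amazon S3, for CloudWatch logs, and for job bookmarks. The helper name, configuration name, and key ARN are hypothetical placeholders:

```python
from typing import Any, Dict


def security_configuration_request(name: str, kms_key_arn: str) -> Dict[str, Any]:
    """Build kwargs for glue.create_security_configuration (hypothetical helper).

    Uses one KMS key for S3 data written by Glue, CloudWatch logs, and job bookmarks.
    """
    return {
        "Name": name,
        "EncryptionConfiguration": {
            "S3Encryption": [{"S3EncryptionMode": "SSE-KMS", "KmsKeyArn": kms_key_arn}],
            "CloudWatchEncryption": {"CloudWatchEncryptionMode": "SSE-KMS", "KmsKeyArn": kms_key_arn},
            "JobBookmarksEncryption": {"JobBookmarksEncryptionMode": "CSE-KMS", "KmsKeyArn": kms_key_arn},
        },
    }
```

With boto3 and credentials available, the result would be passed as `boto3.client("glue").create_security_configuration(**security_configuration_request("etl-encrypted", key_arn))`, and jobs opt in by naming the configuration in the `SecurityConfiguration` parameter of `create_job`.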